Lab 11: Localization on the real robot

Objective

The goal of this lab is to implement the Bayes Filter on the real robot and use it for grid localization. We implement only the update step, based on 360 degree scans, because the robot's motion is so noisy that the prediction step does not help.
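
To make the update step concrete, here is a minimal sketch of an update-only grid Bayes Filter. It is not the optimized code provided for the lab. The function name `update_step`, the array shapes, the Gaussian sensor model, and the value of `sensor_sigma` are all assumptions made for illustration.

```python
import numpy as np

def update_step(bel_bar, expected_ranges, observed_ranges, sensor_sigma=0.1):
    """Update-only Bayes Filter step on a discretized pose grid (illustrative sketch).

    bel_bar:         prior belief, shape (nx, ny, na), sums to 1
    expected_ranges: precomputed ranges for every cell, shape (nx, ny, na, n_obs)
    observed_ranges: one scan from the robot, shape (n_obs,)
    sensor_sigma:    assumed std. dev. of the range sensor noise, in meters
    """
    # Gaussian log-likelihood of each reading, summed over the scan.
    # The normalization constant of the Gaussian is dropped because it
    # cancels when the belief is normalized below.
    err = (observed_ranges - expected_ranges) / sensor_sigma
    log_lik = -0.5 * np.sum(err ** 2, axis=-1)

    # Shift by the maximum before exponentiating to avoid underflow.
    lik = np.exp(log_lik - log_lik.max())

    bel = bel_bar * lik
    total = bel.sum()
    if total == 0.0:
        raise ValueError("belief collapsed to zero; check the prior and sensor model")
    return bel / total
```

With no prediction step, `bel_bar` is simply the initial belief (for example a uniform grid), and the most likely pose is the cell returned by `np.unravel_index(np.argmax(bel), bel.shape)`.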

Prelab

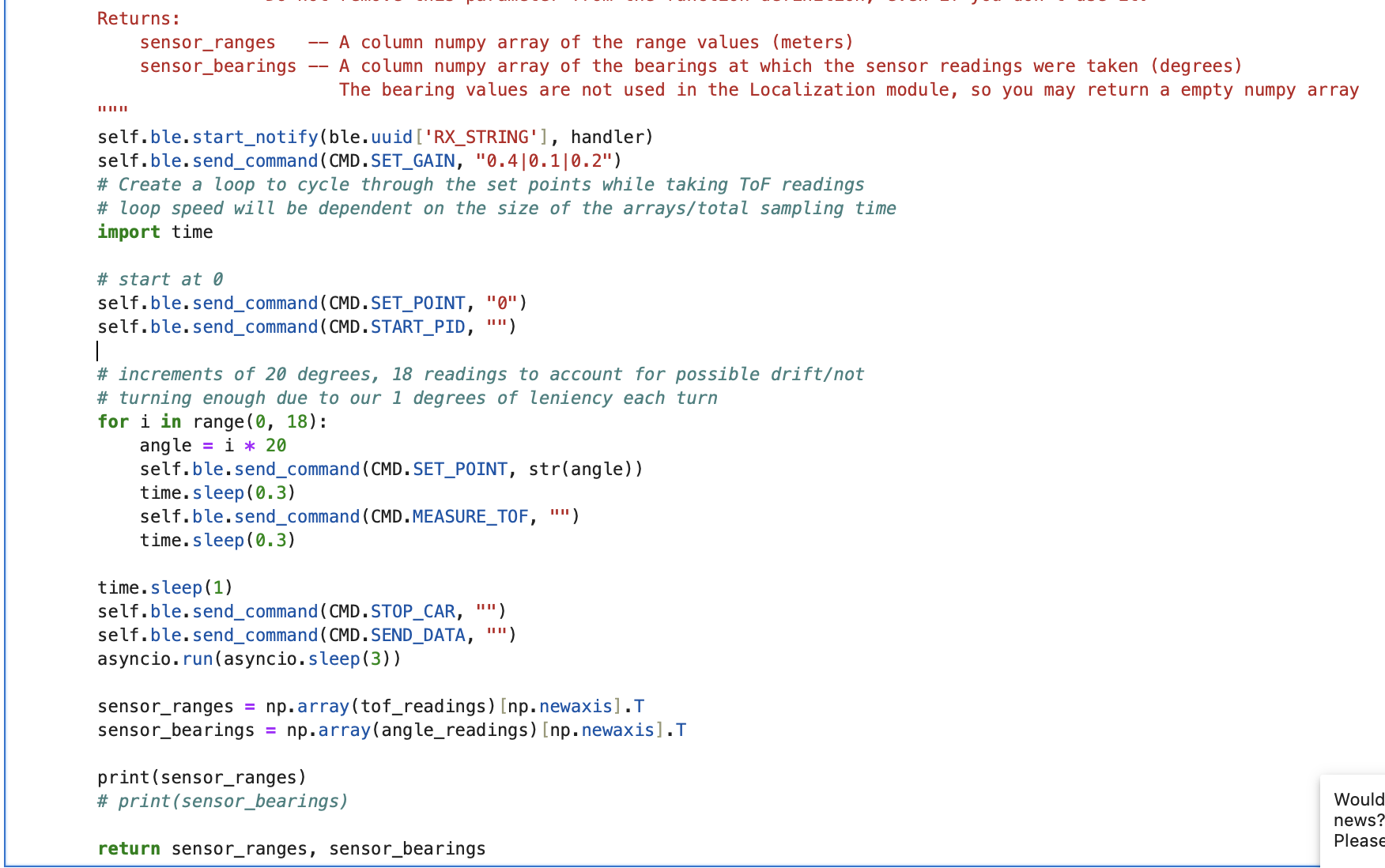

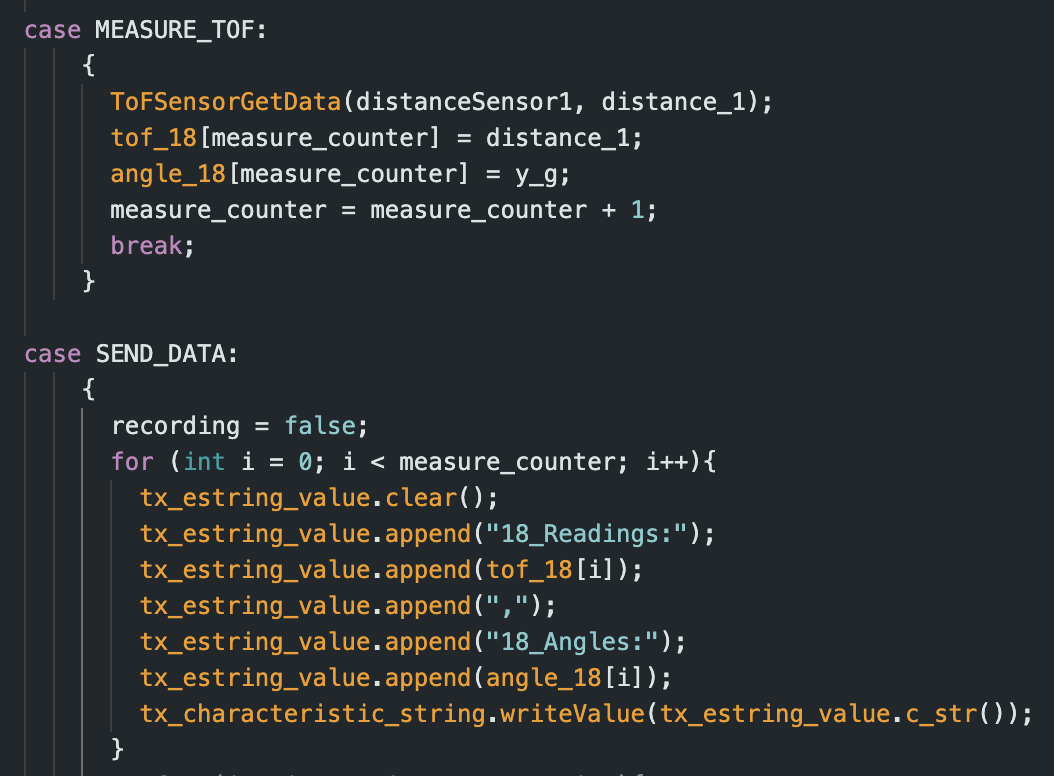

For this lab, we are given optimized code that implements the Bayes Filter on the virtual robot, so we only need to modify it to work with the real robot. The main task is to gather the ToF data from a 360 degree rotation and feed it into the Bayes Filter code. As a sanity check, we ran the simulation with the optimized Bayes Filter to confirm that it worked in simulation.

Implementation on Real Robot

The implementation was quite straightforward because my code from Lab 9 already completed the 360 degree rotation in 20 degree increments. I copied over the required base code and added the Bluetooth code from Lab 9. I did modify both the Arduino and Python code slightly to take only one reading per rotation increment. In Lab 9, I took readings continuously, so there were many more than 18 readings. The general loop now is that the robot rotates, waits for a delay, takes a reading, waits for another delay, then rotates again. This yields exactly 18 distinct readings.
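
The rotate, wait, read, wait loop described above can be sketched as follows. This is an illustration of the control flow only, not the actual Arduino or Python code from the lab: `rotate_by` and `read_tof` are hypothetical callables standing in for the robot commands, and the `settle_s` delay value is an assumption.

```python
import time

def collect_scan(rotate_by, read_tof, steps=18, step_deg=20.0,
                 settle_s=0.5, sleep=time.sleep):
    """Collect one ToF reading per rotation increment (illustrative sketch).

    rotate_by: hypothetical callable that turns the robot by the given degrees
    read_tof:  hypothetical callable that returns one range reading
    settle_s:  assumed delay before and after each reading, in seconds
    """
    angles, ranges = [], []
    for i in range(steps):
        rotate_by(step_deg)                 # rotate one increment
        sleep(settle_s)                     # let the robot settle
        ranges.append(read_tof())           # take exactly one reading
        angles.append((i + 1) * step_deg)   # cumulative commanded rotation
        sleep(settle_s)                     # wait again before the next turn
    return angles, ranges
```

With the defaults, 18 steps of 20 degrees cover the full 360 degrees and produce 18 readings, one per heading.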

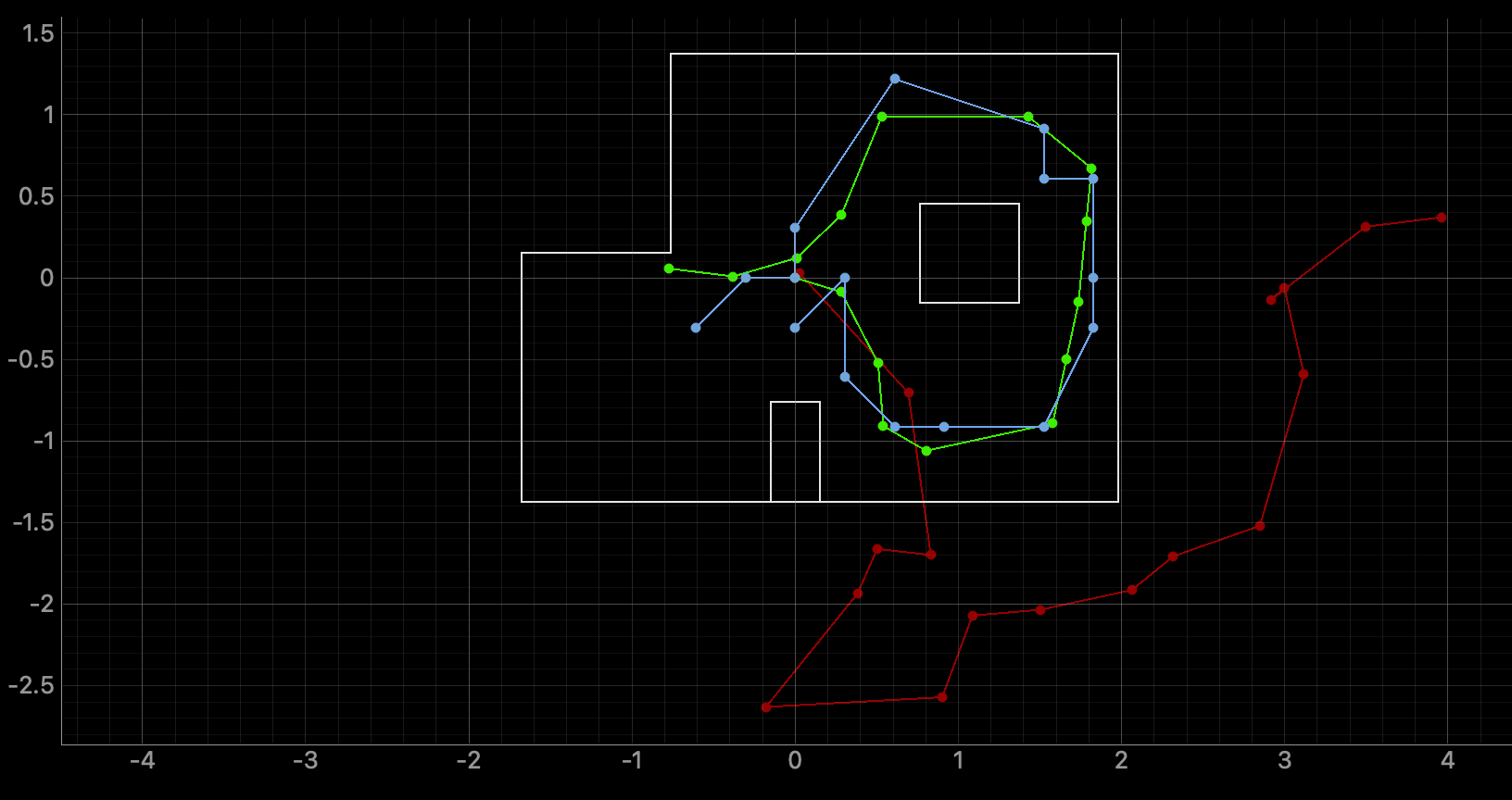

Results on Real Localization

Below are the visualized localization results at the four keypoints. In general, every localization was correct, but it took a few trials because I had not standardized the 0 degree angle to the same orientation on every trial. The scale is in meters rather than feet. I omit a video of the data collection because it is the same as in Lab 9: the robot spins in a circle, which is uninteresting.
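
To compare the keypoint labels with the plots, the coordinates can be converted to meters. The sketch below assumes the labels (-3, -2), (0, 3), (5, -3), and (5, 3) are given in feet; the names `FT_TO_M` and `feet_to_meters` are introduced here for illustration.

```python
FT_TO_M = 0.3048  # exact definition of one foot in meters

def feet_to_meters(point_ft):
    """Convert an (x, y) point from feet to meters."""
    x_ft, y_ft = point_ft
    return (x_ft * FT_TO_M, y_ft * FT_TO_M)

# Keypoints from this lab, assumed to be expressed in feet.
keypoints_ft = [(-3, -2), (0, 3), (5, -3), (5, 3)]
keypoints_m = [feet_to_meters(p) for p in keypoints_ft]

for ft, m in zip(keypoints_ft, keypoints_m):
    print(f"{ft} ft -> ({m[0]:.3f}, {m[1]:.3f}) m")
```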

(-3, -2)

[Figure: localization result at (-3, -2)]

(0, 3)

[Figure: localization result at (0, 3)]

(5, -3)

[Figure: localization result at (5, -3)]

(5, 3)

[Figure: localization result at (5, 3)]